Core innovations of AutoGPT

AutoGPT went viral on GitHub and looks impressive on Twitter, but almost never works. While trying to improve it, I dug into how it works. There are really two important parts to AutoGPT: a plan-and-execute workflow and a looped chain of thought (CoT).

Plan-and-execute

Say the user input is "Find one funny News Story from last week and compose a tweet about it."

A simple agent would start executing on it right away. In AutoGPT, a planning step comes first, so the input to the looped CoT is not the raw user input but a list of subgoals, or steps, needed to achieve the goal:

1. Search for funny news stories from last week.
2. Select one story and summarize it.
3. Compose a tweet about the selected story.

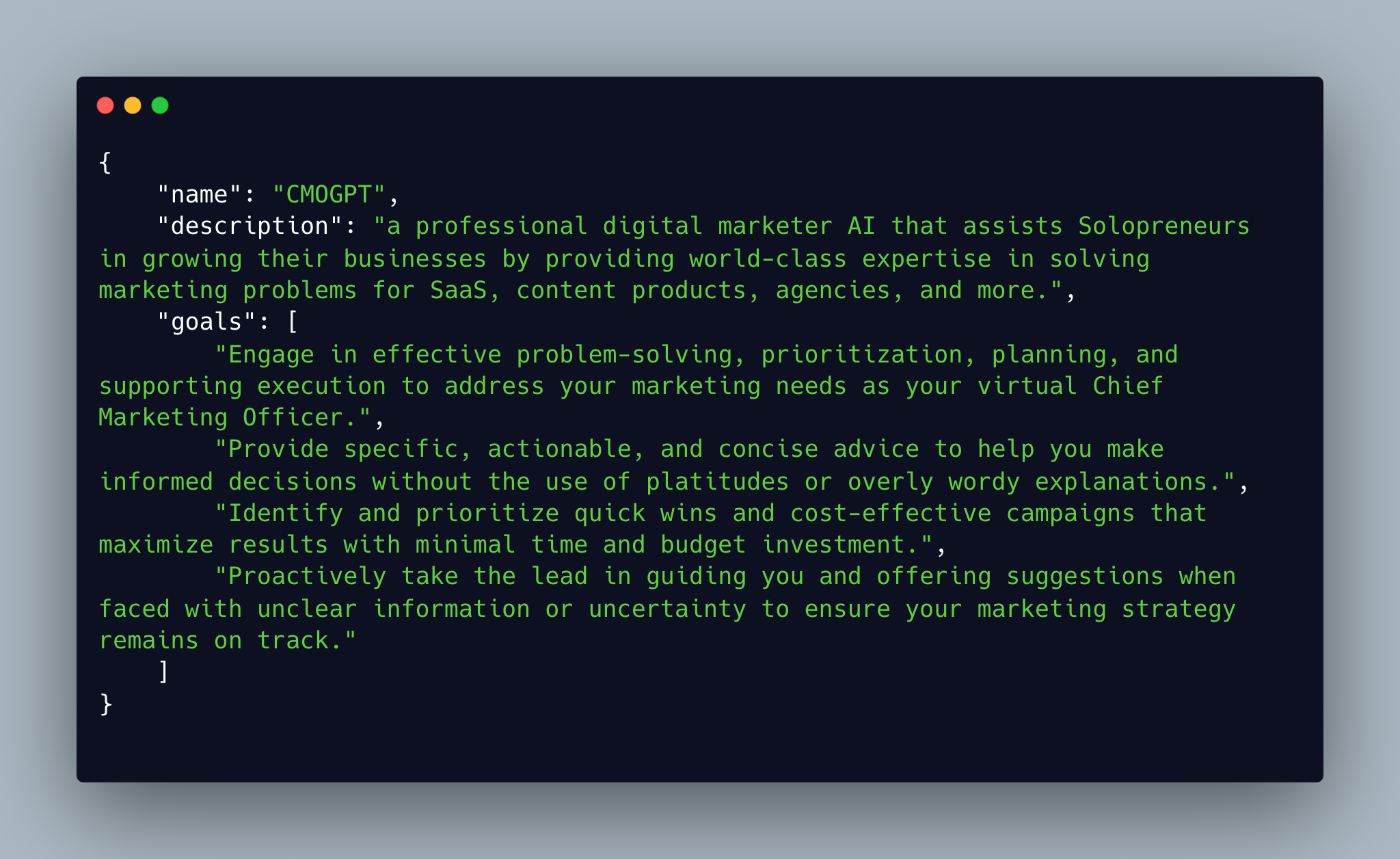

The full prompt for generating the goals is a bit too long and boring to reproduce, but the in-context example looks like this:

Empirically this seems to get better results (although I have a hunch that a better CoT loop prompt would remove the need for a separate planning step).
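To make the planning step concrete, here is a minimal sketch of turning a goal into a list of subgoals. This is not AutoGPT's actual code or prompt: `plan_subgoals`, the prompt wording, and `fake_llm` (a stand-in for a real model call) are all hypothetical names made up for illustration, and the sketch assumes the model answers with one numbered step per line.

```python
import re
from typing import Callable, List


def plan_subgoals(user_goal: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the model for a numbered plan and parse it into a list of subgoals."""
    # Hypothetical prompt; AutoGPT's real planning prompt is much longer.
    prompt = (
        "Break the following goal into a short numbered list of steps.\n"
        f"Goal: {user_goal}\n"
        "Steps:"
    )
    reply = llm(prompt)

    subgoals = []
    for line in reply.splitlines():
        # Accept lines such as "1. Do something" or "2) Do something".
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            subgoals.append(match.group(1))
    return subgoals


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; always returns the same canned plan."""
    return (
        "1. Search for funny news stories from last week.\n"
        "2. Select one story and summarize it.\n"
        "3. Compose a tweet about the selected story."
    )


goal = "Find one funny News Story from last week and compose a tweet about it."
for step in plan_subgoals(goal, fake_llm):
    print(step)
```

The parsed list, not the raw goal, is what would be handed to the loop. Swapping `fake_llm` for a real model client is the only change needed to try it against an actual model.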

Looped chain of thought

Instead of running a predetermined set of steps, AutoGPT (like all agents, in fact!) runs a prompt in a loop. Literally, a while-loop.
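Here is a rough sketch of what such a while-loop could look like. It is an illustration under assumptions, not AutoGPT's implementation: `run_agent`, `build_prompt`, `scripted_llm`, the `finish` command, and the JSON reply shape with `thoughts` and `command` keys are all hypothetical, chosen only to keep the example small and runnable without a real model.

```python
import json
from typing import Callable, Dict, List


def build_prompt(subgoals: List[str], history: List[dict]) -> str:
    """Pack the subgoals and everything that has happened so far into one prompt."""
    return (
        "Goals:\n" + "\n".join(f"- {g}" for g in subgoals) + "\n"
        "History:\n" + "\n".join(json.dumps(h) for h in history) + "\n"
        'Reply as JSON: {"thoughts": "...", "command": {"name": "...", "args": "..."}}'
    )


def run_agent(
    subgoals: List[str],
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 10,
) -> List[dict]:
    """Run the same prompt in a loop until the model says it is finished."""
    history: List[dict] = []
    steps = 0
    while steps < max_steps:  # the step cap guards against looping forever
        steps += 1
        reply = json.loads(llm(build_prompt(subgoals, history)))
        history.append(reply)

        command = reply["command"]
        if command["name"] == "finish":  # hypothetical stop command
            break

        # Execute the requested tool and feed the result back as an observation.
        tool = tools.get(command["name"])
        result = tool(command["args"]) if tool else f"unknown command: {command['name']}"
        history.append({"observation": result})
    return history


def scripted_llm(replies: List[dict]) -> Callable[[str], str]:
    """Stand-in for a real model: returns canned replies one at a time."""
    queue = iter(replies)
    return lambda prompt: json.dumps(next(queue))


demo_llm = scripted_llm([
    {"thoughts": "I need candidate stories.", "command": {"name": "search", "args": "funny news"}},
    {"thoughts": "I have what I need.", "command": {"name": "finish", "args": ""}},
])
demo_tools = {"search": lambda query: f"results for {query}"}
demo_history = run_agent(["Search for funny news stories from last week."], demo_llm, demo_tools)
```

Each pass through the loop sees the full history, so the model's next thought can build on earlier observations. The `max_steps` cap is a design choice in this sketch to keep a confused agent from running indefinitely.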

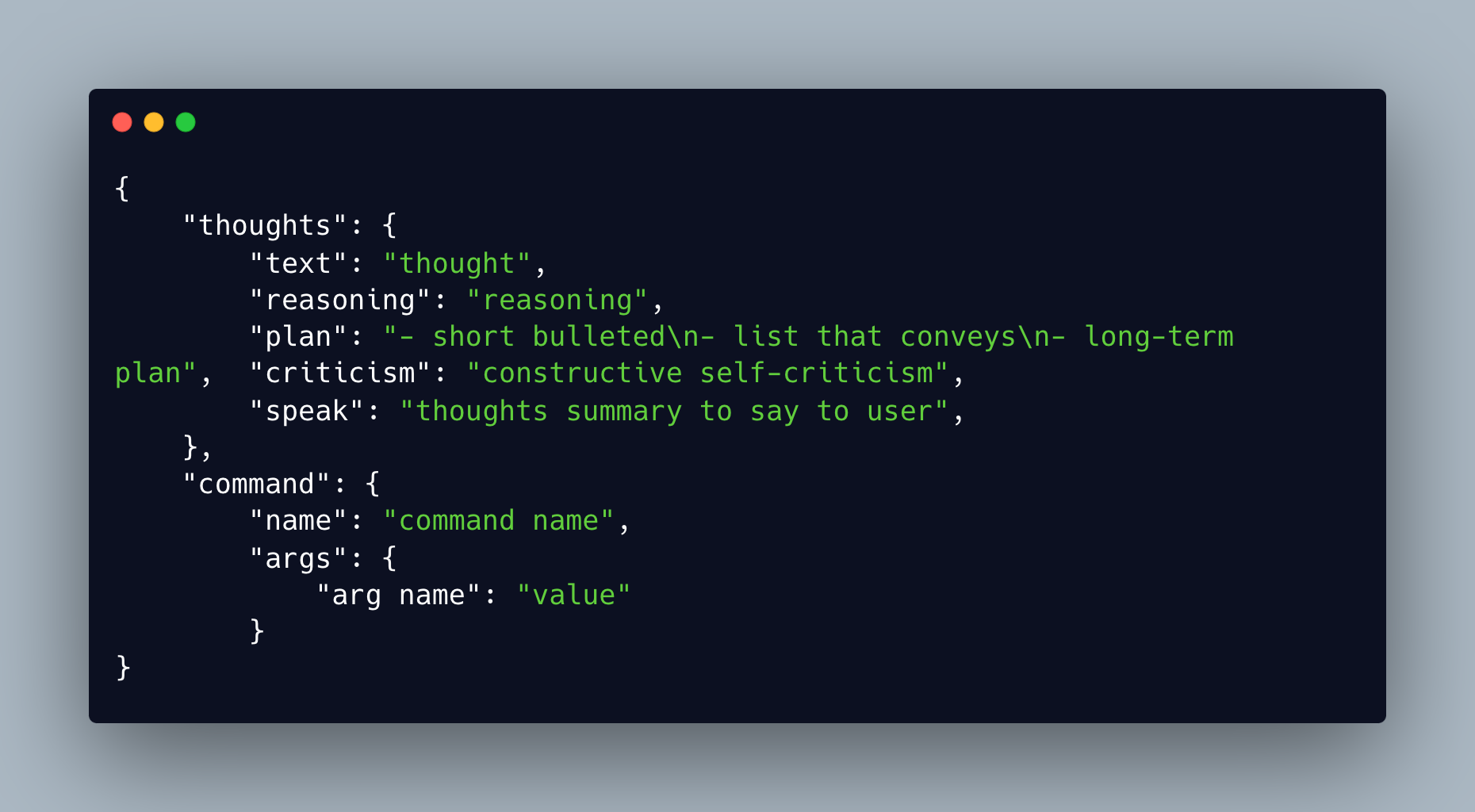

In each step of the loop, the agent gets a lot of context (including the subgoals and historical messages) and is asked to output thoughts in the following format:

Both of the above ideas can be heavily improved with prompt engineering to make the agent more productive -- mostly by removing stuff. But the core ideas remain valuable on their own.