Creating single-file apps with LLMs

To prototype a mobile-optimized writing app, I used LLMs as code generators. The idea isn't new; I got the inspiration from Simon Willison's experiments creating micro-UIs. You prompt for whatever functionality you need and ask for a single HTML file that contains any CSS and JS inline. This makes copy-pasting and local testing easy, and the result can be hosted on any web server (even GitHub Pages). Plus, unlike with modern frontend frameworks, I don't have to deal with a mess of dozens of files and five compilation steps.

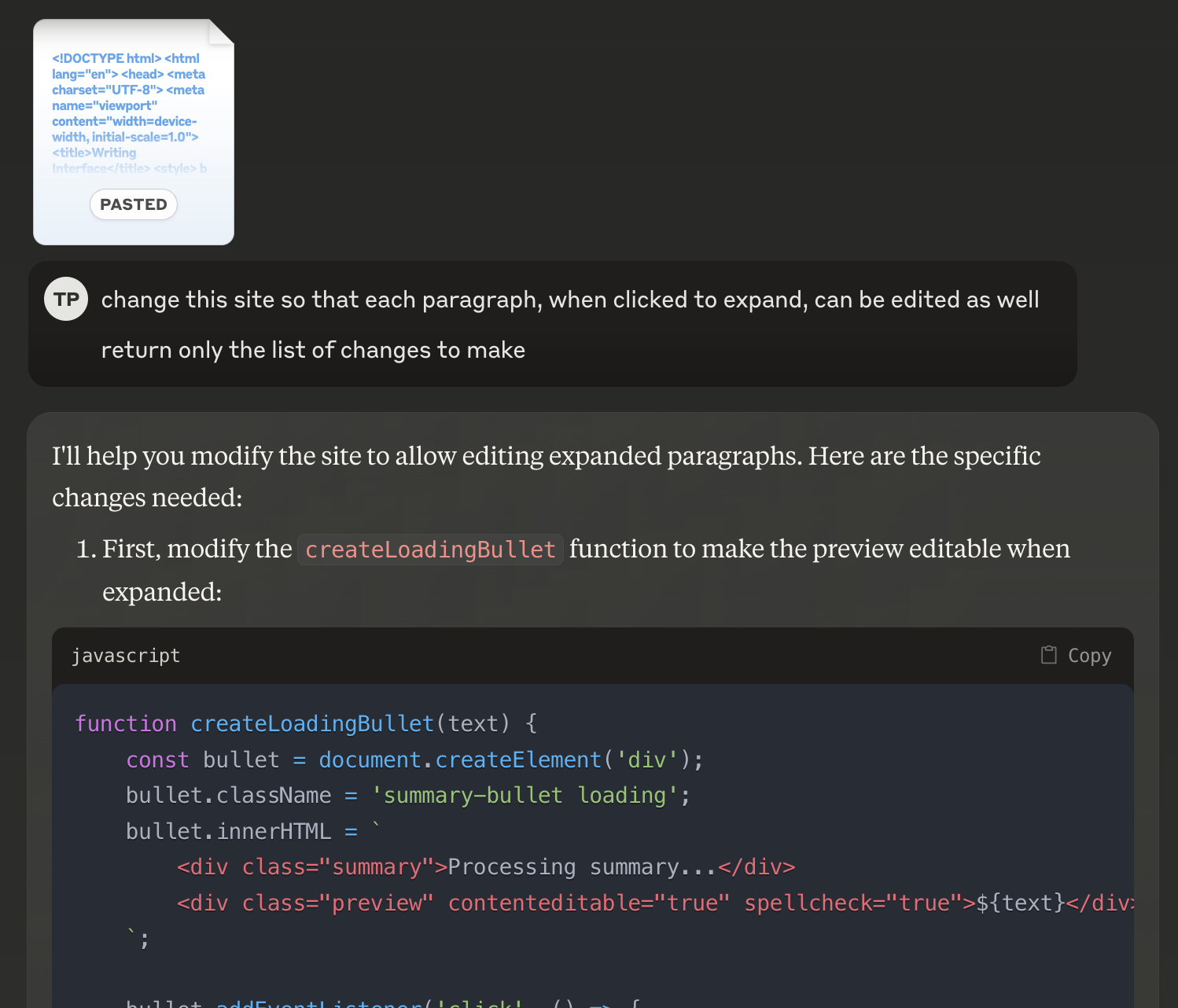

The overall experience is addictive. I started off with Claude, and was delighted to find that it supports this workflow natively: the chat is shown on the left, while the preview and code are shown on the right. In a matter of minutes I had the core functionality in place. But then I annoyingly hit the maximum output-token limit, because the entire file was rewritten on every change. I worked around this by asking only for diffs. Applying them by hand made the process slightly less efficient, but even so I was making progress 20x faster than writing the code from scratch myself, even with Copilot.

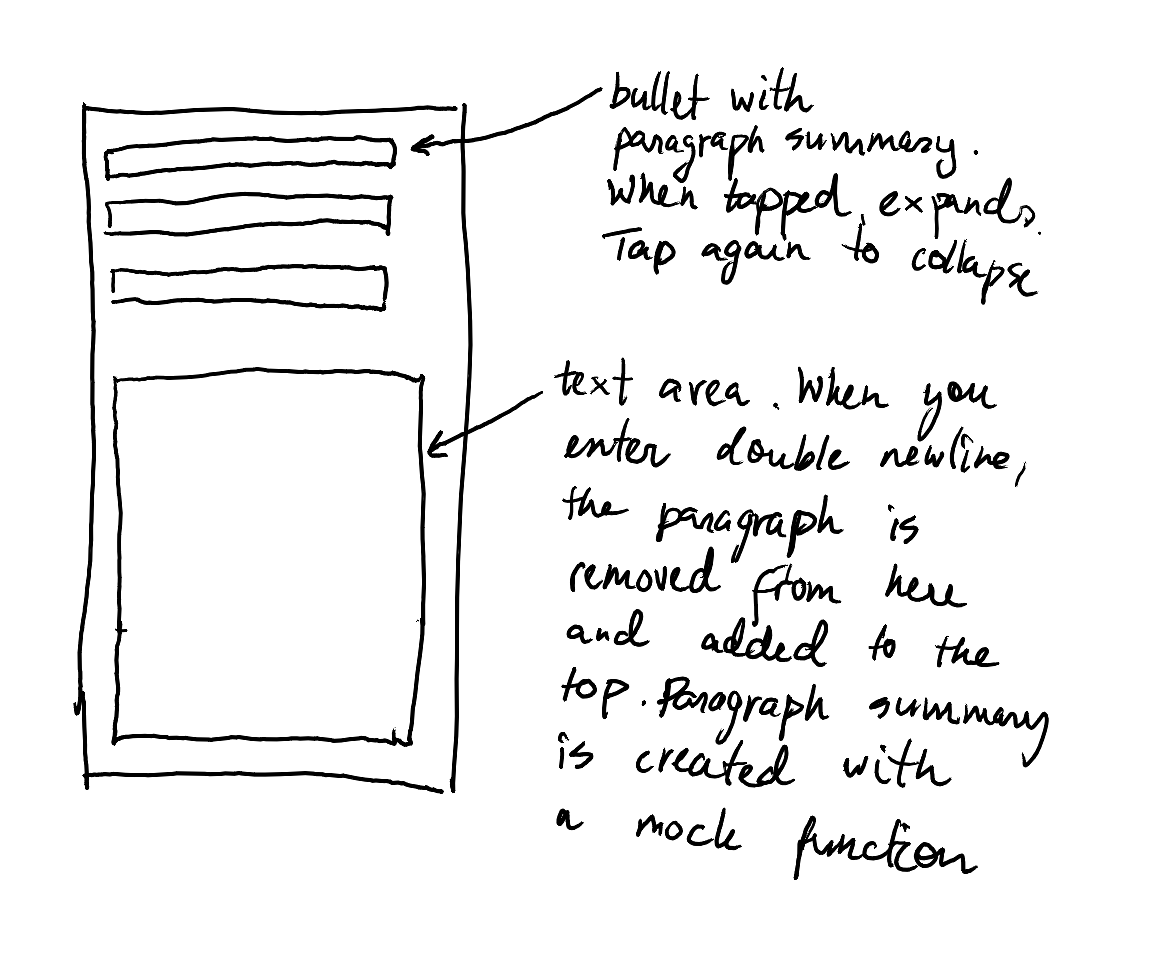

The very first version of the app started as the sketch above, which I drew specifically to feed into Claude. Then, as I tested the app, I asked for more features and modifications, very rarely mentioning technical implementation details. At some point I wanted to start testing on an actual phone, so I created a GitHub Pages repo to deploy the app, and about three minutes later I was continuing development while testing on my phone.

I briefly switched to ChatGPT, hoping for a longer context window, which did help. But ChatGPT's UX for working with pasted content and outputting files is worse, so I stuck with Claude, which was good enough.

Some technical decisions I made myself, like using the browser's local storage instead of any backend storage. Others Claude made better than I would have, to my delight, like using the "created at" timestamp as a unique ID for each post. And for most features there was no struggle, and I didn't have to intervene at all.
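To make the storage choice concrete, here is a minimal TypeScript sketch of local-storage persistence with timestamp IDs. It is an illustration, not the app's actual code: the `Post` shape, the `"posts"` storage key and all helper names are hypothetical, and the small `KeyValueStore` interface stands in for `window.localStorage` (which has the same `getItem`/`setItem` methods) so the sketch also runs outside a browser.

```typescript
// Minimal subset of the Web Storage API used here; window.localStorage
// satisfies this interface, so in the browser you can pass it directly.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Hypothetical post shape: the "created at" timestamp doubles as the ID.
interface Post {
  id: number; // milliseconds since the Unix epoch, from Date.now()
  text: string;
}

const STORAGE_KEY = "posts"; // hypothetical key name

// Read all posts; a missing key means nothing has been saved yet.
function loadPosts(kv: KeyValueStore): Post[] {
  const raw = kv.getItem(STORAGE_KEY);
  return raw === null ? [] : (JSON.parse(raw) as Post[]);
}

// Web Storage only holds strings, so the whole list is stored as JSON.
function savePosts(kv: KeyValueStore, posts: Post[]): void {
  kv.setItem(STORAGE_KEY, JSON.stringify(posts));
}

// Create a post whose ID is its creation time (overridable for tests).
function createPost(kv: KeyValueStore, text: string, now: number = Date.now()): Post {
  const post: Post = { id: now, text };
  savePosts(kv, loadPosts(kv).concat([post]));
  return post;
}

// Update a post's text by ID; returns false if no post has that ID.
function updatePost(kv: KeyValueStore, id: number, text: string): boolean {
  const posts = loadPosts(kv);
  let found = false;
  for (const p of posts) {
    if (p.id === id) {
      p.text = text;
      found = true;
    }
  }
  if (found) savePosts(kv, posts);
  return found;
}

// In-memory stand-in for localStorage so the sketch runs outside a browser.
class MemoryStore implements KeyValueStore {
  private data: { [key: string]: string } = {};

  getItem(key: string): string | null {
    return Object.prototype.hasOwnProperty.call(this.data, key) ? this.data[key] : null;
  }

  setItem(key: string, value: string): void {
    this.data[key] = value;
  }
}
```

Using `Date.now()` as the ID assumes two posts are never created in the same millisecond. That is a reasonable bet for one person typing on a phone, but anything with concurrent writers would need a tiebreaker or a proper random ID.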

But at some point the technical complexity got out of hand. Global state was subtly modified in several distant functions, and the LLM started missing these interactions. Because of this I had to track down several bugs manually, and my involvement grew over time.

So blind prompting only takes you so far. If I were building this as a serious product, now would be the time to reconsider some key choices and refactor quite a bit (possibly moving away from the huge single-file setup and decoupling the different components). LLMs can probably help with this too, but I expect it would take more hand-holding to walk them through it.
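One common way to tame scattered global-state mutations is a minimal Redux-style store: state changes only through a single pure `reducer` function, and UI code subscribes to updates instead of reaching into shared variables. The TypeScript sketch below is purely illustrative of that pattern, not what this app does; the `AppState` fields, action names and `Store` class are hypothetical.

```typescript
// Hypothetical state for a writing app; field names are illustrative only.
interface AppState {
  posts: { id: number; text: string }[];
  activePostId: number | null;
}

// Every allowed state change is listed here, in one place.
type Action =
  | { type: "add"; id: number; text: string }
  | { type: "edit"; id: number; text: string }
  | { type: "select"; id: number | null };

// Pure function: returns a new state object and never mutates the old one.
function reducer(state: AppState, action: Action): AppState {
  switch (action.type) {
    case "add":
      return {
        ...state,
        posts: [...state.posts, { id: action.id, text: action.text }],
        activePostId: action.id,
      };
    case "edit": {
      // Copy the narrowed fields out so the callback below sees their types.
      const { id, text } = action;
      return {
        ...state,
        posts: state.posts.map((p) => (p.id === id ? { ...p, text } : p)),
      };
    }
    case "select":
      return { ...state, activePostId: action.id };
  }
}

type Listener = (state: AppState) => void;

// The only place state is replaced; subscribers re-render from the result.
class Store {
  private state: AppState;
  private listeners: Listener[] = [];

  constructor(initial: AppState) {
    this.state = initial;
  }

  getState(): AppState {
    return this.state;
  }

  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  dispatch(action: Action): void {
    this.state = reducer(this.state, action);
    for (const listener of this.listeners) listener(this.state);
  }
}
```

Because every change passes through `dispatch`, a single log line or breakpoint there shows each state transition, instead of having to hunt for mutations across distant functions.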

Overall, though, I like this workflow for throwaway apps. The time it took me to get from zero to a first functioning prototype was measured in minutes, and the entire app was feature-complete in a couple of hours, including lots of testing. I love that I now have a way of quickly throwing together frontends, which are not a part of the stack I feel fluent in.

At the same time, I feel that the computer science and general software knowledge I do have (plus bits of frontend) actually gives me a significant advantage. I can veto bad decisions (like switching everything to React just to add a small feature) and make technical choices that improve future maintainability. So I am not afraid: the LLM here complements me rather than replacing me.