Gemini 2.5 Pro isn't quite Claude 3.7 at coding

Gemini 2.5 Pro was just released and it could be a big deal, if its coding abilities pan out.

So far, Gemini has sat slightly behind the OpenAI and Anthropic models of the same class, and far behind Claude 3.7 Sonnet at coding, where Claude is considered unmatched for practical engineering tasks right now. At the same time, Gemini is generally more than 10x cheaper, has higher rate limits, and is good enough for many tasks. That makes it a workhorse for LLM tasks in general, and at Pactum specifically.

What I will be looking out for is how well Gemini 2.5 Pro works as a coding model in Cursor, Aider and similar tools. If it is close to Claude 3.7 in coding quality and Gemini pricing stays roughly where it has been (Gemini 2.5 Pro pricing will be announced soon), that's a huge deal. And judging from the benchmarks, Gemini could come close to, though not yet exceed, the state of the art.

I threw a simplified example into several models (it's the core concept of something I vibe-coded up a few months back) and here are the results.

The prompt was:

create a single-page HTML app (css, js, html stand-alone) that has a single textbox, and when you enter triple newline, it turns that paragraph into a bullet above the textbox. use beautiful bootstrap styling, minimal white theme
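
For reference, the core logic the prompt asks for is small. Below is a minimal TypeScript sketch of it, not taken from any of the models' outputs. The names `extractBullets` and `SplitResult` are made up for illustration, and the sketch assumes "triple newline" means three consecutive `\n` characters in the textbox value.

```typescript
// Hypothetical helper, for illustration only: not from any model's output.
interface SplitResult {
  bullets: string[];  // finished paragraphs, in the order they were typed
  remaining: string;  // text that should stay in the textbox
}

// Assumption: "triple newline" = three consecutive "\n" characters.
const TRIPLE_NEWLINE = "\n\n\n";

function extractBullets(text: string): SplitResult {
  // Normalize Windows line endings so "\r\n" counts as one newline.
  const normalized = text.replace(/\r\n/g, "\n");

  // Everything before the last triple newline is a finished paragraph;
  // the final piece is whatever the user is still typing.
  const parts = normalized.split(TRIPLE_NEWLINE);
  const last = parts.pop() ?? "";

  // Drop leading newlines left over from extra Enter presses.
  const remaining = last.replace(/^\n+/, "");

  // Skip empty paragraphs (e.g. Enter pressed three times in an empty box).
  const bullets = parts
    .map((p) => p.trim())
    .filter((p) => p.length > 0);

  return { bullets, remaining };
}
```

In a page, this would typically be called from the textbox's `input` event handler: append each entry of `bullets` as a list item above the textbox, then set the textbox value to `remaining`.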

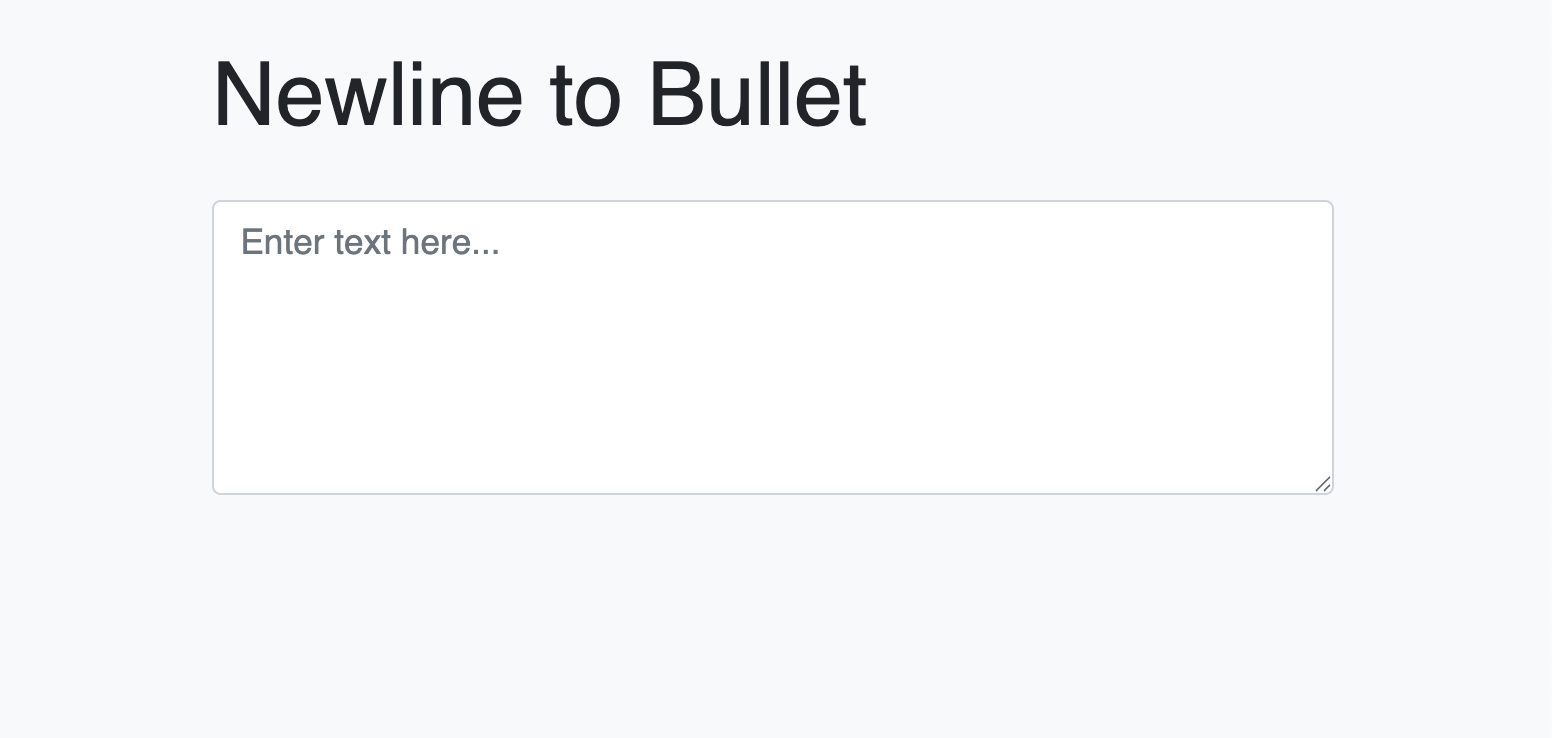

Here's what I got back from Gemini 2.0 Flash (released a few months ago, and not the strongest of the Gemini family back then) -- it doesn't even fulfil its core function:

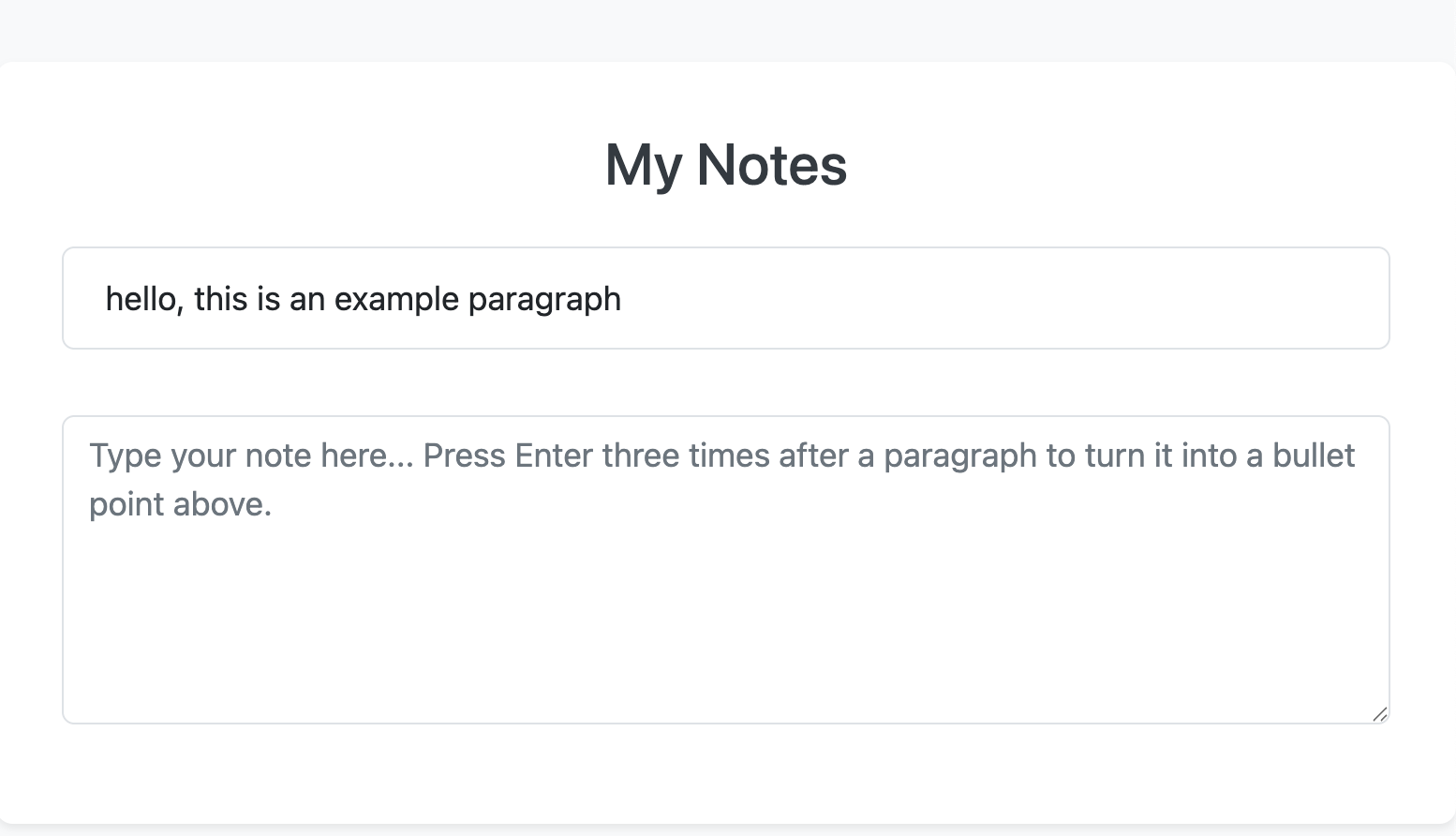

Here's Gemini 2.5 Pro, functional but not the best looking:

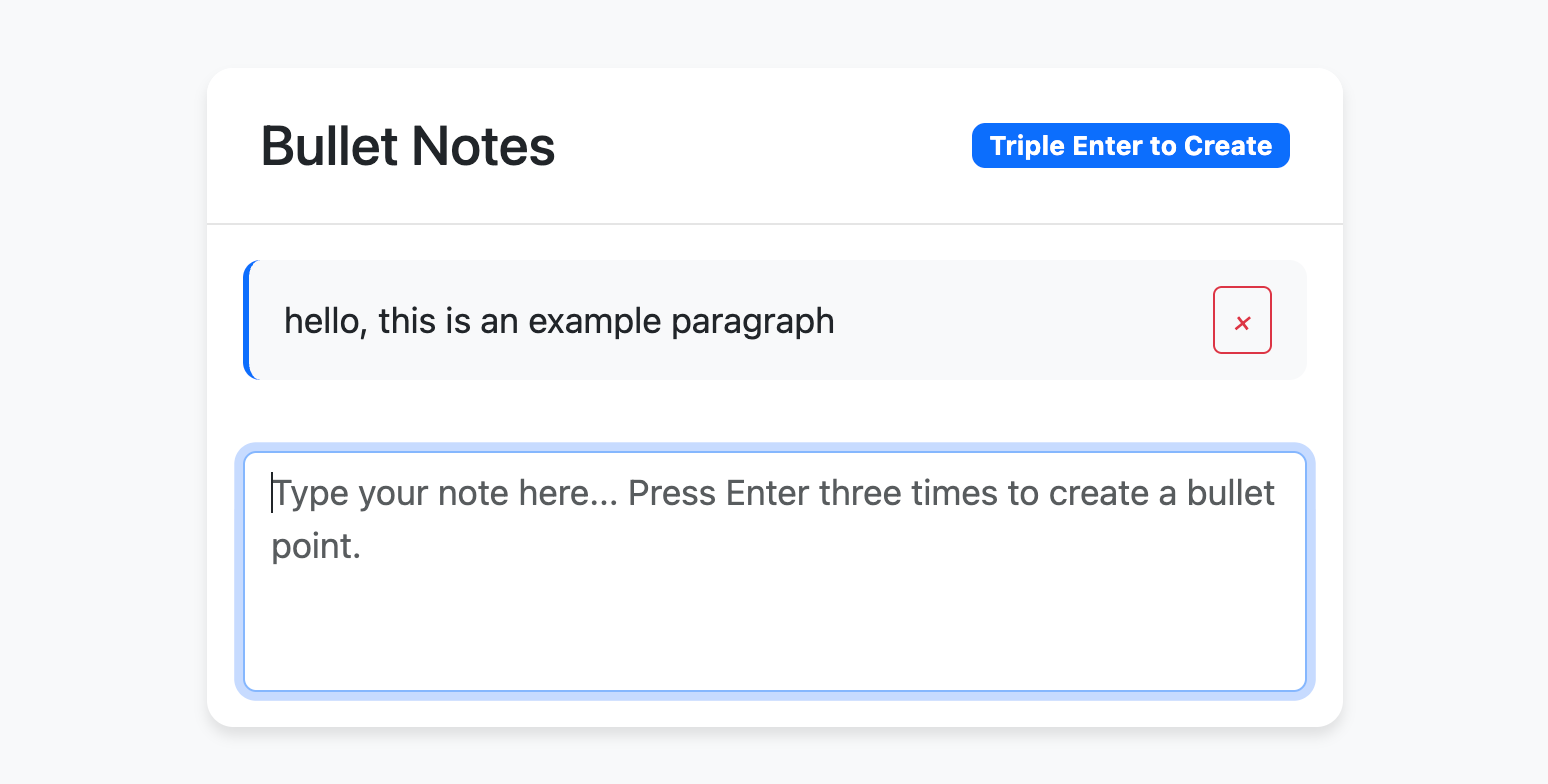

And here's Claude 3.7 Sonnet, which added a few nice touches like a "delete" button, and generally looks best:

Going off this highly scientific test, there still seems to be a gap between 2.5 Pro and 3.7 Sonnet. But to be more confident, I'd need to try 2.5 Pro out in Cursor when it becomes available there.