Building automation-heavy products

This post is based on my talk at North Star AI 2019.

When I speak about automation in general, and the Automation team at Veriff, I mean it in a specific sense of the word.

Building software to perform typically-human tasks…

whose solutions usually cannot be perfectly described in code.

An example of an automation task is building software to find all faces in a photo. A 100x100-pixel grayscale image can be represented as a two-dimensional table with values ranging from 0.0 to 1.0. It is very difficult to write a program that finds out where faces are in this table. As far as I know, nobody has been able to write a program like this from scratch.

A counter-example is building a continuous integration pipeline: a tool that automates the process of building source code into a package and deploying it onto a server. I don’t think of this as automation because each step can be perfectly described and summarised into a script. The script is guaranteed to do what it is intended to do, except for any bugs made when developing it.

From our experience building automation at Veriff, here are three tips to help you to do the same in your domain as quickly as possible.

1. Define your unit of automation

The framing of your automation problem is important. A natural way to do this is to figure out what your units of automation are. I’ve written a whole blog post on this concept; the gist is that you want to figure out what is the smallest recurring piece of the human task, and measure against that.

For the identity verification product at Veriff, the unit of automation is one verification: the whole set of images and other data that a user submits when verifying themselves. This unit is useful in two ways:

- KPI: we can calculate a high-level metric of how well we are doing: yesterday’s automation rate is the proportion of units processed without any human involvement, out of all units that were processed yesterday. If yesterday we made eight verification decisions, and five of them were automatic, the automation rate is 5 / 8 = 62.5%.

- Prioritisation: we can look at the three non-automatic decisions from yesterday and figure out what we need to build or improve to automate these decisions as well. Suppose two out of the three needed a manual decision because we were unable to figure out which document was shown in the image; in this case building a perfect document classifier would reduce human work threefold.

If the decision process has multiple stages, you can measure automation rate individually for each stage. Suppose the first step of verifying a person is making sure none of three user-submitted images are blurred. Here the natural unit of automation is one image, not one verification: all images except one may be perfectly sharp, so labelling the whole verification as blurry would not be useful.

In a second step, when making the final decision, the unit of automation is again one verification: the decision is made for the verification as a whole, not single images. Breaking the decision into stages is very dependent on the specifics of the decision, and is an important architectural choice when building automation. Unfortunately I don’t know of a good principled way of making this choice. So far it has seemed fairly obvious once I’ve started actual work on the problem.

2. Stay algorithm-agnostic

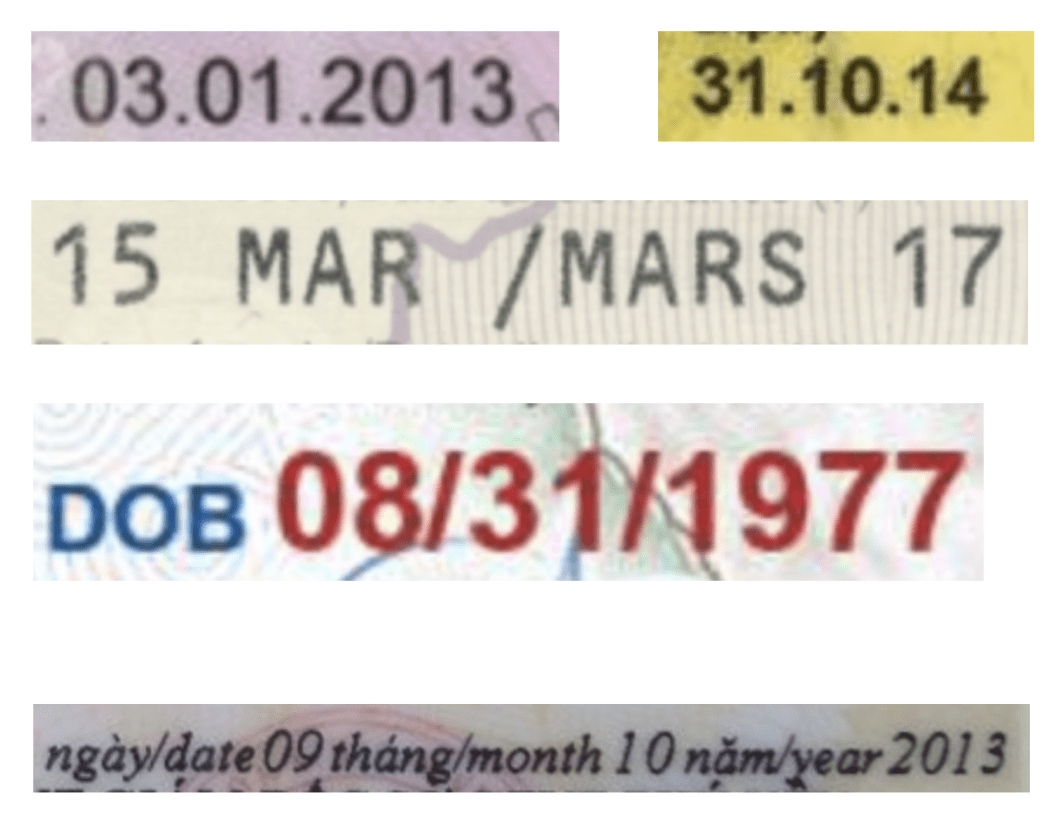

The image above shows date formats on various documents. From top left: British and Australian driver’s licenses, British passport, Californian driver’s license, and Vietnamese driver’s license. Each of these is in a different format, and some are very annoying to machine-read especially when some of the characters are incorrectly detected in OCR.

How to parse them into a common date format? You could imagine training a neural network to do this. You could also imagine a trivial solution: using a regular expression like (\d\d).(\d\d).(\d\d)?(\d\d).

Your decision about which one to use should be empirical: what is the distribution you are working on, and what percentage of it does the simple regex approach solve? Simple hand-coded solutions are surprisingly often good enough; starting from the easiest thing to implement (and then improving it if performance is not good enough) saves a huge amount of time.

Similarly, detecting low image quality (due to blurriness, noisiness, darkness, or any other cause) may be difficult to do generally. An 80-20 solution if you already run OCR on each image: count the number of characters detected; if it is too low then classify it as low quality. This “model” can be expressed in two short lines of SQL and tested in about as many, so prototyping this solution is less than an hour’s work.

3. Build a final decisionmaker

A complex automatic decision consists of many sub-decisions, each of which can be addressed by a separate algorithm. At some point you will want to run the sub-decisions as separate services, and run them asynchronously for cost, latency, or other reasons. How do you make the final decision then?

You could give every component service the power to make a final decision. For example, finding that all user-submitted images consist of 100% black pixels due to some technical error is an obvious cause for rejection, regardless of the output from a text extraction service running in parallel. This distributed decisionmaking power, however, can come back to bite you: race conditions are inherent in this kind of a system, and making the final decision dependent on the order of sub-decisions makes debugging much harder.

A better approach is to build a final decisionmaker: a single point of decision that aggregates all component decisions and has the sole power to make final decisions. I realise this sounds like the anti-pattern of “single point of failure” but this also gives you a single point of debugging, backtesting, and handling edgecases and missing data.

This is the high-level algorithm for building automation. First, make sure you know your unit of automation, and from there the top-level KPI and everyday problem prioritisation follow. Second, stay algorithm-agnostic in how you solve these problems, and you’ll find they are easier than expected. Finally, build all of these components into a final decisionmaker to make development and maintenance of these statistical systems much easier.